Advanced Parsing of Nested Dynamic Data Structures using Kusto Query Language (KQL)

Parsing these structures at scale can be tricky, especially when dealing with multi-level nesting, varying schemas, or malformed entries.

In the world of big data analytics, handling complex, nested data structures like JSON objects, arrays, and property bags is a common challenge. Kusto Query Language (KQL), the powerful querying tool behind Azure Data Explorer, Azure Monitor, and Microsoft Sentinel, excels at processing large-scale datasets efficiently. While KQL is often associated with security analytics, its capabilities extend far beyond—making it ideal for IoT telemetry, application logs, and other non-security contexts where data arrives in irregular, dynamic formats. However, parsing these structures at scale can be tricky, especially when dealing with multi-level nesting, varying schemas, or malformed entries.

This blog post dives into advanced techniques for parsing nested dynamic data in KQL. We'll explore the mv-apply operator for multi-level array expansions and transformations, and then cover mv-expand combined with conditional logic using iif() or case() for handling irregular structures, including strategies for error-handling malformed data. I'll include practical examples to illustrate these concepts, drawing from real-world scenarios like device telemetry or log analysis.

Deep Dive into mv-apply: Multi-Level Array Expansions and Transformations

The mv-apply operator is a powerhouse for applying subqueries to elements within dynamic arrays or property bags on a per-record basis. It essentially expands the array (similar to mv-expand), applies a custom subquery to each element, and unions the results back into the output. This is particularly useful for transformations like finding top values, aggregating subsets, or performing calculations across nested arrays without exploding the entire dataset prematurely.

Key Features and Syntax

Purpose: Applies a tabular subquery to each expanded element of an array, allowing for filtering, sorting, or aggregation within the context of each original record.

Syntax: T | mv-apply [with_itemindex = IndexColumnName] ColumnsToExpand [limit RowLimit] on (SubQuery)

Parameters:

ColumnsToExpand: List of array expressions to expand (e.g., Arr to typeof(long)).

RowLimit: Caps the number of rows per input record.

SubQuery: The logic applied to each expanded subtable.

Internally, mv-apply leverages mv-expand for expansion, preserves array order, and repeats non-expanded columns as needed. It's great for multi-level nesting because you can chain multiple mv-apply calls or nest subqueries.

Examples

Let's start with a simple dataset of device readings with nested arrays.

Finding the Largest Element in Nested Arrays Suppose you have a table with device IDs and an array of temperature readings:

let data = datatable (DeviceId: string, Readings: dynamic)

[

"Device1", dynamic([25, 30, 28]),

"Device2", dynamic([40, 35, 45])

];

data

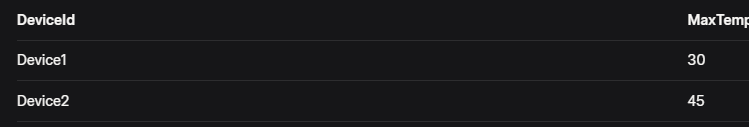

| mv-apply MaxTemp=Readings to typeof(long) on

(

top 1 by MaxTemp desc

)This expands the Readings array, finds the top (largest) value per device, and outputs:

It's efficient for scale because the subquery runs per record, avoiding full dataset explosion.

Multi-Level Expansion: Top Two Elements with Aggregation For deeper nesting, say an array of sensor objects:

let data = datatable (DeviceId: string, Sensors: dynamic)

[

"Device1", dynamic([{"Temp": [25, 30]}, {"Temp": [28, 32]}]),

"Device2", dynamic([{"Temp": [40, 35]}, {"Temp": [45, 38]}])

];

data

| mv-apply Sensor=Sensors on

(

mv-apply TempArray=Sensor.Temp to typeof(long) on

(

top 2 by TempArray desc

| summarize TopTemps=make_list(TempArray)

)

)This performs a nested expansion: first on sensors, then on each temp array, aggregating the top two temps per sensor. Output might include summarized lists like TopTemps: [30, 28] per sensor per device. This handles multi-level arrays by chaining mv-apply, transforming data without losing context.

Tips for Scale: Use limit to prevent excessive row generation in large arrays. For transformations, combine with functions like summarize or make_list to re-aggregate results, keeping query performance high in big datasets.

Parsing Irregular Nested Structures with mv-expand and Conditional Logic

While mv-apply is transformation-focused, mv-expand is your go-to for flattening irregular nested structures into multiple records. It expands dynamic arrays or bags, duplicating other columns, and is perfect for irregular data where array lengths vary or structures are inconsistent. Pair it with conditional logic via iif() (simple if-then-else) or case() (multi-condition switch) to handle variations, and incorporate error-handling for malformed entries.

mv-expand Basics

Purpose: Turns a single record with an array/bag into multiple records, one per element.

Syntax: T | mv-expand [kind=(bag | array)] [with_itemindex=Index] Column [to typeof(Type)] [limit Limit]

Modes: bag for property bags (default), array for key-value pairs.

For irregular nesting, mv-expand shines when schemas differ across records—e.g., some have arrays, others bags or nulls.

Combining with Conditional Logic

Use iif() for binary decisions: iif(condition, then, else). Use case() for multiple branches: case(predicate1, then1, predicate2, then2, ..., else).

Error-Handling for Malformed Data

KQL's parse_json() (or todynamic()) is key for ingestion: it converts strings to dynamic, returning the original string as dynamic if malformed—no errors thrown. Check with isnotnull() or typeof() and use conditionals to skip or default bad data. For nested malformed JSON, nest parse_json(tostring(...)).

Examples

Flattening Irregular Arrays with iif() for Defaults Consider logs with optional nested error arrays:

let logs = datatable (LogId: int, Details: dynamic)

[

1, dynamic({"Errors": ["Err1", "Err2"], "Status": "OK"}),

2, dynamic({"Errors": null, "Status": "Failed"})

];

logs

| mv-expand Error=Details.Errors

| extend SafeError = iif(isnotnull(Error), tostring(Error), "No Error")

| project LogId, SafeError, Status=Details.StatusThis expands Errors (handling nulls by producing no extra rows), uses iif() to default missing errors, and outputs flattened records. For malformed JSON in Details, wrap in parse_json first.

Multi-Condition Parsing with case() for Irregular Bags For bags with varying properties:

let data = datatable (Id: int, Props: dynamic)

[

1, dynamic({"Type": "A", "Value": 10}),

2, dynamic({"Type": "B", "Value": "High"}),

3, dynamic({"Malformed": "???"})

];

data

| mv-expand kind=bag Prop=Props

| extend Categorized = case(

Prop.Type == "A", strcat("Numeric: ", Prop.Value),

Prop.Type == "B", strcat("Level: ", Prop.Value),

"Unknown"

)

| where Categorized != "Unknown" // Filter malformedExpands bags to key-value pairs, uses case() to categorize based on type, defaults unknowns (e.g., malformed). This handles irregularity by conditionally transforming.

Nested Parsing with Error-Handling For deeply nested JSON:

let nestedData = datatable (Record: string)

[

'{"Outer": "{\"Inner\": [1,2]}" }'

];

nestedData

| extend ParsedOuter = parse_json(Record)

| extend ParsedInner = parse_json(tostring(ParsedOuter.Outer)).Inner

| mv-expand Value = ParsedInner

| extend ValidValue = iif(typeof(Value) == "long", Value, 0) // Handle type errorsDouble-parses nested strings, expands the inner array, and uses iif() for type checks. If malformed, parse_json returns the string, which iif() can default.

Tips: Always parse early with parse_json, use coalesce() for nulls, and test with small sets. For scale, filter before expanding to avoid performance hits.

TLDR

Mastering advanced parsing in KQL unlocks efficient handling of complex, nested data at scale—whether in security or beyond. mv-apply empowers transformations across multi-level arrays, while mv-expand with iif()/case() tames irregular structures, complete with built-in error tolerance via parse_json. These techniques reduce query complexity and boost performance in large datasets. Experiment with your own data in Azure Data Explorer, and remember: start simple, layer conditionals, and always monitor for scalability. Happy querying!