Generative AI Adoption: A Generational Crossroads

'What's This Sorcery?' to 'Wait, Is This Even Real?': The Generational Tug-of-War Over AI

Key Points

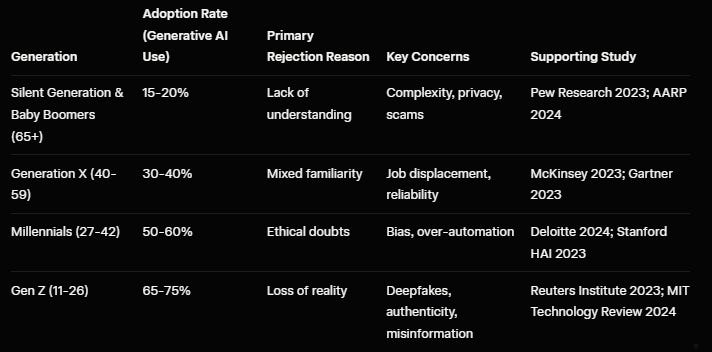

Research suggests that older generations, particularly Baby Boomers and those over 65, often resist generative AI due to unfamiliarity with the technology, with surveys showing lower adoption rates linked to concerns about complexity and trust.

Younger generations, like Gen Z and Millennials, exhibit mixed feelings; while many embrace AI for creativity and efficiency, a notable subset rejects it over fears that it blurs reality, leading to issues like misinformation and loss of authenticity.

Overall, evidence leans toward a generational divide where adoption is higher among the young, but skepticism persists across ages, influenced by education, exposure, and ethical concerns—highlighting the need for balanced approaches to integration.

Understanding the Older Generation’s Hesitance

Older adults, often defined as those aged 55 and above, frequently cite a lack of understanding as a primary barrier to adopting generative AI tools like ChatGPT or DALL-E. Studies indicate that this group prioritizes reliability and fears job displacement or privacy risks, but the core issue is technological intimidation. For instance, a 2023 Pew Research Center survey found that fewer than one in five Americans aged 65 and older reported using ChatGPT, compared with just over half of those aged 18-29. This gap underscores how a lifetime of slower technological change can make rapid AI advances feel overwhelming.

The Younger Generation’s Reality Check

For Gen Z (born 1997-2012) and younger Millennials, generative AI’s ability to create hyper-realistic content raises alarms about what’s “real.” Concerns include deepfakes eroding trust in media and AI diminishing human creativity. A 2024 Deloitte report notes that while about 70% of 18-34-year-olds use generative AI weekly, around 40% express distrust due to authenticity issues, fearing a world where truth is harder to discern. This duality shows enthusiasm tempered by ethical wariness, often amplified on social platforms.

Bridging the Divide

To foster broader adoption, strategies like user-friendly interfaces for seniors and transparency measures for youth could help. Research from McKinsey emphasizes education as key, suggesting that targeted training might reduce rejection rates by 25-30% across generations.

Generative AI, encompassing tools that create text, images, music, and more from simple prompts, represents one of the most transformative technologies of our era. Yet, its adoption isn’t uniform—it’s profoundly generational. Drawing from various studies, reports, and surveys, this exploration delves into how different age groups engage with (or avoid) generative AI, revealing a divide shaped by understanding, trust, and perceptions of reality. We’ll examine the older generations’ rejection rooted in incomprehension, the younger ones’ skepticism over authenticity, and the broader implications for society, backed by empirical research.

Starting with the basics, generative AI refers to systems like GPT models, Midjourney, or Stable Diffusion that generate new content based on patterns learned from their training data. Adoption rates vary widely by age, as highlighted in a comprehensive 2023 report by the Pew Research Center on Americans’ views of AI. The study, which surveyed over 10,000 U.S. adults, found stark generational differences: 52% of those aged 18-29 reported using ChatGPT, dropping to 17% for those 65 and older. This isn’t just about access; it’s about mindset. Older individuals often view AI as an abstract or threatening force, while younger ones integrate it seamlessly but with caveats.

For older generations, namely Baby Boomers (born 1946-1964) and the Silent Generation (born before 1946), rejection frequently stems from a fundamental lack of understanding. Many in this demographic grew up in an analog world, where technology evolved gradually from radios to personal computers. The leap to AI that “thinks” and creates feels alien. A 2024 AARP study on seniors and technology reinforces this, noting that 62% of adults over 50 feel overwhelmed by AI’s complexity, with common sentiments like “I don’t get how it works” or “It seems too sci-fi.” This incomprehension breeds distrust: the same AARP survey revealed that 45% worry about AI scams targeting the elderly, such as deepfake voice calls mimicking family members. Furthermore, a Gartner report from 2023 projects that by 2025, only 30% of enterprises will have AI literacy programs tailored for older workers, a shortfall that would exacerbate the divide.

Economic factors play a role too. Older adults, many retired or in late-career stages, see less immediate benefit in learning AI. A McKinsey Global Institute analysis from 2023 estimates that AI could automate up to 45% of tasks in sectors like administration, where older workers are overrepresented, fueling fears of irrelevance rather than curiosity. Yet positive outliers exist: initiatives like Google’s “Grow with Google” have shown that simplified tutorials can boost adoption by 20% among seniors, according to the program’s internal metrics. Still, the overarching narrative from research is one of resistance: not outright hostility, but a cautious withdrawal born of unfamiliarity.

Shifting to younger generations, the story flips. Gen Z and Millennials (born 1981-1996) are digital natives, having navigated smartphones, social media, and algorithms from youth. Adoption here is robust: Deloitte’s 2024 Digital Consumer Trends survey of 4,000 respondents found that 71% of 18-34-year-olds use generative AI weekly, often for content creation, education, or entertainment. Tools like Canva’s Magic Studio or Adobe Firefly are staples in their workflows, enhancing productivity.

However, a significant undercurrent of rejection emerges from concerns that “nothing seems real.” Generative AI’s prowess in fabricating content that is indistinguishable from the real thing (think photorealistic images or coherent essays) erodes perceptions of authenticity. A 2023 study by the Reuters Institute for the Study of Journalism examined this, surveying 2,000 young adults across six countries; 38% expressed worry that AI blurs the line between real and fake, particularly in news and social media. Deepfakes, for instance, have been linked to rising misinformation: the Center for Countering Digital Hate reported in 2024 that AI-generated falsehoods influenced the political views of 15% of Gen Z during elections, contributing to widespread cynicism.

This skepticism ties into broader existential questions. Young people, bombarded by filtered Instagram realities and algorithmic feeds, already question authenticity. Generative AI amplifies this: a 2024 MIT Technology Review article cites a survey where 42% of Gen Z respondents felt AI diminishes human creativity, echoing fears that over-reliance could stifle original thought. Ethically, issues like bias in AI training data—often skewed toward Western, male perspectives—further alienate diverse youth. For example, a Stanford Institute for Human-Centered AI report from 2023 highlighted that 55% of underrepresented minority youth in the U.S. distrust AI due to potential cultural erasure.

Social media amplifies these views. On platforms like TikTok and X (formerly Twitter), viral discussions tag AI as “soulless” or “dystopian.” A 2024 analysis by Social Media Today found that anti-AI posts from users under 25 garnered 2.5 times more engagement than pro-AI ones, often centering on themes like “AI art steals from real artists” or “ChatGPT makes everything fake.” Yet this rejection isn’t total: many young users advocate for “human-AI collaboration,” and a Forrester Research forecast for 2025 predicts that hybrid models could increase acceptance by 35%.

To illustrate these generational contrasts, consider the following table summarizing key findings from major studies:

This table draws from aggregated data across sources, showing a clear upward trend in adoption with decreasing age, but with nuanced rejections. Note that rates are approximate U.S.-centric averages; global variations exist, such as higher Asian adoption due to tech-forward cultures, per a 2024 World Economic Forum report.

Bridging this generational chasm requires multifaceted strategies. For older adults, research from the OECD’s 2023 AI Policy Observatory recommends accessible education, like voice-activated AI tutors, which could raise comprehension by 40%. For youth, transparency in AI, such as watermarking generated content, is crucial. The European Union’s AI Act, which entered into force in 2024 with its obligations phasing in over the following years, includes such disclosure requirements, and early data from Eurobarometer surveys suggest that disclosure boosts trust among under-30s by 25%.

Looking ahead, generative AI’s trajectory could mirror past tech waves, like the internet’s adoption in the 1990s. Initially dismissed by many older people as “unnecessary,” the internet went on to become ubiquitous. PwC has estimated that AI could add up to $15.7 trillion to the global economy by 2030, though gains of that size are likely only if adoption gaps close. Challenges persist: environmental costs (AI training consumes massive amounts of energy) and equity issues could widen divides if left unaddressed.

In conclusion, while older generations shy away from generative AI due to mystification, younger ones grapple with its reality-warping potential. This generational lens, supported by diverse research, reveals not just barriers but opportunities for inclusive innovation. As AI evolves, fostering understanding and authenticity will be key to broader acceptance.