Parsing & Extracting Data from JSON Columns using KQL: Handling Nested Structures Efficiently

JSON Jungle Gym

In modern data analytics, JSON (JavaScript Object Notation) is a ubiquitous format for storing and exchanging data due to its flexibility and support for complex, nested structures. When working with JSON data in Azure Data Explorer (ADX) or other platforms that support Kusto Query Language (KQL), efficiently parsing and extracting data from JSON columns is critical for gaining insights. This blog post explores how to handle JSON columns, including nested structures, using KQL. We'll cover key techniques, best practices, and provide working examples to help you master JSON parsing in KQL.

Why JSON in KQL?

JSON is often used to store semi-structured data, such as logs, IoT telemetry, or API responses, in databases. In ADX, JSON data is typically stored in a column of type dynamic, which allows KQL to parse and query the data flexibly. However, JSON's nested nature can make querying challenging, especially when dealing with arrays, objects, or deeply nested fields. KQL provides powerful operators like parse_json, mv-expand, and bag_unpack to simplify this process.

Key KQL Functions for JSON Parsing

Before diving into examples, let's review the primary KQL functions for working with JSON:

parse_json: Converts a JSON string into a dynamic object for querying.

mv-expand: Expands arrays or property bags into individual rows, useful for nested arrays.

bag_unpack: Flattens a dynamic object (property bag) into separate columns.

Dynamic property access: Use dot notation (e.g., column.field) or bracket notation (e.g., column["field"]) to access JSON properties.

todynamic: An alias for parse_json, used to ensure a string is treated as JSON.

These functions, combined with KQL's query capabilities, make it possible to efficiently extract data from complex JSON structures.

Setting Up a Sample Dataset

To demonstrate JSON parsing, let's use a sample table called DeviceLogs with a JSON column Telemetry. The Telemetry column contains nested JSON data representing device telemetry, including device information, sensor readings, and timestamps.

Here's a sample dataset:

let DeviceLogs = datatable(DeviceId: string, Telemetry: dynamic)

[

"DEV001", parse_json('{"device_info": {"name": "SensorA", "location": "Room1"}, "readings": [{"timestamp": "2025-05-30T10:00:00Z", "temperature": 22.5, "humidity": 45}, {"timestamp": "2025-05-30T10:01:00Z", "temperature": 23.0, "humidity": 47}], "status": "active"}'),

"DEV002", parse_json('{"device_info": {"name": "SensorB", "location": "Room2"}, "readings": [{"timestamp": "2025-05-30T10:00:00Z", "temperature": 21.0, "humidity": 50}], "status": "inactive"}')

];This dataset includes:

A DeviceId column (string).

A Telemetry column (dynamic) with nested JSON containing:

device_info: An object with name and location.

readings: An array of objects, each with timestamp, temperature, and humidity.

status: A string indicating device status.

Let's explore how to parse and extract data from this JSON column.

Example 1: Extracting Top-Level Fields

To extract top-level fields like status from the Telemetry column, you can use dot notation or bracket notation.

Query:

DeviceLogs

| project DeviceId, Status = Telemetry.statusOutput:

Explanation:

Telemetry.status directly accesses the status field in the JSON object.

This works for any top-level field in the JSON structure.

Example 2: Accessing Nested Object Fields

To extract fields from a nested object, such as device_info.name, chain the property names using dot notation.

Query:

DeviceLogs

| project DeviceId, DeviceName = Telemetry.device_info.name, Location = Telemetry.device_info.locationOutput:

Explanation:

Telemetry.device_info.name navigates the nested device_info object to retrieve the name field.

Multiple fields can be extracted in a single project statement.

Example 3: Handling Nested Arrays with mv-expand

The readings field is an array of objects, which requires special handling. The mv-expand operator is ideal for expanding arrays into rows.

Query:

DeviceLogs

| mv-expand Readings = Telemetry.readings

| project DeviceId, Timestamp = Readings.timestamp, Temperature = Readings.temperature, Humidity = Readings.humidityOutput:

Explanation:

mv-expand Readings = Telemetry.readings expands the readings array into separate rows, with each row containing one object from the array.

The Readings alias allows you to reference the expanded objects' fields (e.g., Readings.timestamp).

This is particularly useful for analyzing time-series data or logs stored in arrays.

Example 4: Combining Nested Objects and Arrays

To combine information from nested objects and arrays, use mv-expand and access other fields in the same query.

Query:

DeviceLogs

| mv-expand Readings = Telemetry.readings

| project DeviceId, DeviceName = Telemetry.device_info.name, Timestamp = Readings.timestamp, Temperature = Readings.temperature, Status = Telemetry.statusOutput:

Explanation:

mv-expand handles the readings array, while Telemetry.device_info.name and Telemetry.status access fields from the original JSON.

The result includes both array-derived fields (e.g., Temperature) and top-level or nested fields (e.g., DeviceName, Status).

Example 5: Flattening JSON with bag_unpack

For JSON objects with many fields, bag_unpack can flatten a dynamic object into columns automatically.

Query:

DeviceLogs

| project DeviceId, DeviceInfo = Telemetry.device_info

| evaluate bag_unpack(DeviceInfo)Output:

Explanation:

project DeviceId, DeviceInfo = Telemetry.device_info extracts the device_info object.

evaluate bag_unpack(DeviceInfo) converts the device_info object's fields (name, location) into separate columns.

This is useful when you don't know the JSON structure in advance or want to avoid manually specifying fields.

Example 6: Handling Missing or Null Fields

JSON data often has missing or null fields, which can cause errors if not handled. Use the coalesce function or conditional checks to manage this.

Query:

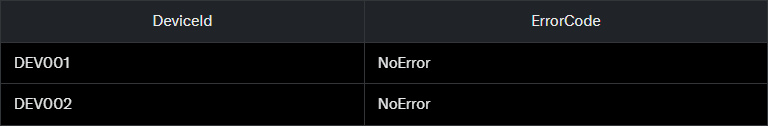

DeviceLogs

| project DeviceId, ErrorCode = coalesce(Telemetry.error_code, "NoError")Output:

Explanation:

Since error_code doesn't exist in the JSON, coalesce returns the fallback value "NoError".

This ensures queries remain robust against missing fields.

Best Practices for Efficient JSON Parsing in KQL

Use parse_json Sparingly: If the JSON is already stored as a dynamic column, you don't need parse_json. Use it only for string columns containing JSON.

Leverage mv-expand for Arrays: Always use mv-expand to handle arrays, as it simplifies querying nested data.

Optimize with project: Reduce the dataset early with project to include only necessary columns, improving performance.

Handle Missing Data: Use coalesce, isnull, or isnotnull to manage missing or null fields gracefully.

Use bag_unpack for Dynamic Structures: When dealing with unknown or variable JSON schemas, bag_unpack can save time.

Test with Small Datasets: Before running queries on large datasets, test with a small sample to ensure correctness.

Index JSON Columns (if possible): In ADX, consider extracting frequently queried JSON fields into materialized views or computed columns for faster access.

Performance Considerations

Parsing JSON in KQL is generally efficient, but large datasets or deeply nested structures can impact performance. Here are tips to optimize:

Filter Early: Apply where clauses before parsing JSON to reduce the dataset size.

Avoid Over-Expanding: Be cautious with mv-expand on large arrays, as it can generate many rows. Use take or limit to test.

Cache Results: For frequently accessed JSON data, consider pre-processing it into a table with extracted fields using ADX's materialized views.

Monitor Query Cost: Use the .show queries command in ADX to analyze query performance and optimize as needed.

TLDR

KQL provides a robust set of tools for parsing and extracting data from JSON columns, even when dealing with complex nested structures. By mastering functions like parse_json, mv-expand, and bag_unpack, you can efficiently query JSON data to uncover valuable insights. The examples in this post demonstrate how to handle top-level fields, nested objects, arrays, and dynamic structures, while best practices ensure your queries are both effective and performant.

Whether you're analyzing IoT telemetry, application logs, or API responses, KQL's JSON parsing capabilities make it a powerful tool for data exploration. Try these techniques in your own ADX environment, and experiment with your JSON data to see how KQL can streamline your workflows.